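```r
# Packages used in this section: dplyr and tidyr (via tidyverse), lubridate, knitr, kableExtra
library(tidyverse)
library(lubridate)
library(knitr)
library(kableExtra)

# Extract unique stations with coordinates
stations <- indego %>%
  distinct(start_station, start_lat, start_lon)
```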
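
Because `distinct()` is applied to all three columns, a station whose recorded coordinates vary between trips gets more than one row. The `expand.grid()` and `left_join()` steps that follow would then repeat every such duplicate. The quick check below uses hypothetical toy data with made-up station IDs and coordinates. It shows the effect and one way to collapse the table to a single coordinate pair per station. Keeping the first pair is an assumption here; averaging the coordinates would be an equally reasonable choice.

```r
library(dplyr)

# Hypothetical toy trips: station 101 is logged with two slightly different latitudes
toy_trips <- data.frame(
  start_station = c(101, 101, 101, 102),
  start_lat     = c(39.9470, 39.9470, 39.9471, 39.9540),
  start_lon     = c(-75.1664, -75.1664, -75.1664, -75.1716)
)

# distinct() keeps one row per unique (station, lat, lon) combination
toy_stations <- toy_trips %>%
  distinct(start_station, start_lat, start_lon)
nrow(toy_stations)  # 3 rows for only 2 stations

# List stations that map to more than one coordinate pair
toy_stations %>%
  count(start_station) %>%
  filter(n > 1)

# One option: keep the first coordinate pair seen for each station
toy_stations_unique <- toy_stations %>%
  distinct(start_station, .keep_all = TRUE)
nrow(toy_stations_unique)  # 2
```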

```r
# Create complete hourly time sequence
time_sequence <- data.frame(
  interval60 = seq(
    from = min(indego$interval60),
    to = max(indego$interval60),
    by = "hour"
  )
)
```
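
`seq()` with `by = "hour"` steps through every hour between the earliest and latest `interval60`, and it includes both endpoints. As a result, the panel gets a row even for hours in which no trips occurred anywhere. The sequence only lines up with the trip counts if `interval60` was already floored to the hour upstream, for example with `lubridate::floor_date(..., unit = "hour")`. This section assumes that step happened earlier. The minimal sketch below uses made-up timestamps in UTC, which avoids daylight-saving transitions.

```r
library(lubridate)

# Flooring a raw trip timestamp to its hourly bin
floor_date(ymd_hms("2024-07-01 08:47:12", tz = "UTC"), unit = "hour")
# -> 2024-07-01 08:00:00 UTC

# Hypothetical study window, already floored to the hour
window_start <- ymd_hms("2024-07-01 00:00:00", tz = "UTC")
window_end   <- ymd_hms("2024-07-03 23:00:00", tz = "UTC")

hours <- seq(from = window_start, to = window_end, by = "hour")

length(hours)  # 72: three full days, both endpoints included
```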

```r
# Generate full panel structure (all station-hour combinations)
study_panel <- expand.grid(
  start_station = stations$start_station,
  interval60 = time_sequence$interval60,
  stringsAsFactors = FALSE  # expand.grid() turns strings into factors by default
) %>%
  # Add station coordinates
  left_join(stations, by = "start_station") %>%
  # Add observed trip counts for each station-hour
  left_join(
    indego %>%
      count(start_station, interval60, name = "trips"),
    by = c("start_station", "interval60")
  ) %>%
  # Replace NA with 0 (no trips in that hour)
  mutate(trips = replace_na(trips, 0))
```
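
The cross join followed by `left_join()` turns sparse trip records into a balanced panel. Every station appears in every hour, and hours with no departures become explicit zeros instead of missing rows. The toy version below uses two hypothetical stations and three trips, so the row arithmetic is easy to check: 2 stations × 3 hours = 6 rows, with 3 trips in total. `tidyr::complete()` is shown as an alternative route to the same panel. It is a swapped-in technique, not what the code above uses.

```r
library(dplyr)
library(tidyr)

# Hypothetical toy trips: 2 stations, 3 trips, timestamps already floored to the hour
toy_trips <- data.frame(
  start_station = c(101, 101, 102),
  interval60 = as.POSIXct(c("2024-07-01 08:00:00",
                            "2024-07-01 08:00:00",
                            "2024-07-01 10:00:00"), tz = "UTC")
)
toy_hours <- seq(min(toy_trips$interval60), max(toy_trips$interval60), by = "hour")

# Same pattern as above: cross join, attach counts, fill missing counts with 0
toy_panel <- expand.grid(
  start_station = unique(toy_trips$start_station),
  interval60 = toy_hours,
  stringsAsFactors = FALSE
) %>%
  left_join(count(toy_trips, start_station, interval60, name = "trips"),
            by = c("start_station", "interval60")) %>%
  mutate(trips = replace_na(trips, 0))

nrow(toy_panel)       # 6 station-hours
sum(toy_panel$trips)  # 3 trips, none lost or double-counted

# Alternative: count first, then complete() adds the missing combinations as 0
toy_panel_alt <- toy_trips %>%
  count(start_station, interval60, name = "trips") %>%
  complete(start_station, interval60 = toy_hours, fill = list(trips = 0))
```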

# Add temporal features to panel

study_panel <- study_panel %>%

mutate(

week = week(interval60),

month = month(interval60, label = TRUE),

dotw = wday(interval60, label = TRUE),

hour = hour(interval60),

date = as.Date(interval60),

weekend = ifelse(dotw %in% c("Sat", "Sun"), 1, 0),

rush_hour = ifelse(hour %in% c(7, 8, 9, 16, 17, 18), 1, 0),

# Create time of day categories

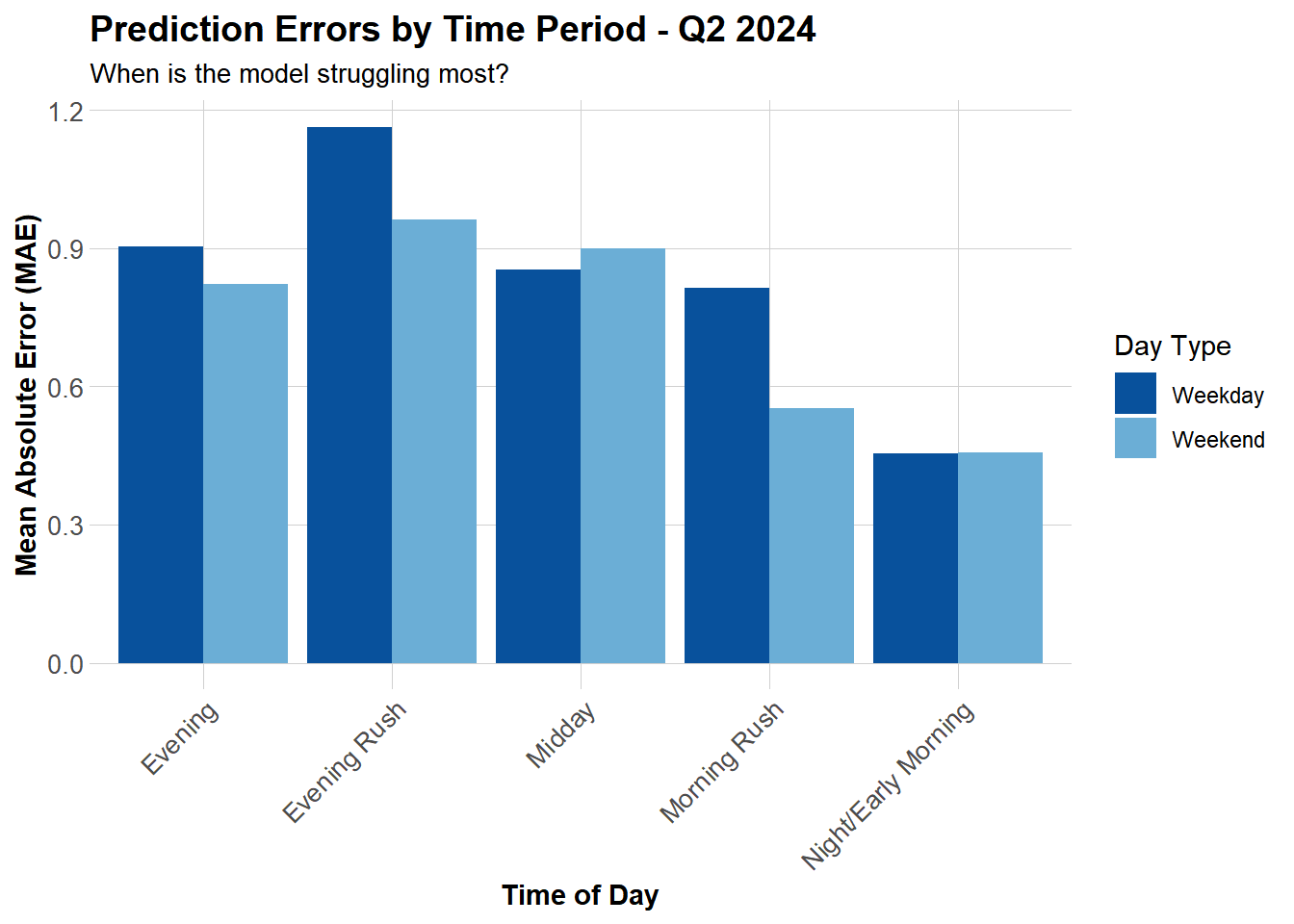

time_of_day = case_when(

hour >= 6 & hour < 9 ~ "Morning Rush",

hour >= 9 & hour < 16 ~ "Midday",

hour >= 16 & hour < 19 ~ "Evening Rush",

hour >= 19 & hour < 22 ~ "Evening",

TRUE ~ "Night/Early Morning"

)

)
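
`case_when()` checks its conditions in order and assigns the first one that matches. The final `TRUE ~` clause catches every remaining hour, so the bins cannot overlap. Running the same rules over the 24 hours of a day shows how many hours fall into each category. Placing the `rush_hour` flag alongside makes it visible that the two definitions differ at 6:00 and 9:00. The sketch also shows a weekend test based on the numeric day of week. Unlike the `"Sat"`/`"Sun"` labels from `wday(label = TRUE)`, this test does not depend on the session locale. The dates used are arbitrary examples.

```r
library(dplyr)
library(lubridate)

# Apply the same time-of-day rules and rush_hour flag to every hour of a day
hours_df <- data.frame(hour = 0:23) %>%
  mutate(
    rush_hour = ifelse(hour %in% c(7, 8, 9, 16, 17, 18), 1, 0),
    time_of_day = case_when(
      hour >= 6 & hour < 9 ~ "Morning Rush",
      hour >= 9 & hour < 16 ~ "Midday",
      hour >= 16 & hour < 19 ~ "Evening Rush",
      hour >= 19 & hour < 22 ~ "Evening",
      TRUE ~ "Night/Early Morning"
    )
  )

# Hours per category: Evening 3, Evening Rush 3, Midday 7,
# Morning Rush 3, Night/Early Morning 8
table(hours_df$time_of_day)

# Hour 6 is "Morning Rush" but not rush_hour; hour 9 is rush_hour but "Midday"
hours_df[hours_df$hour %in% c(6, 9), ]

# Locale-independent weekend flag: with week_start = 7, Sunday = 1 and Saturday = 7
example_days <- ymd(c("2024-07-06", "2024-07-07", "2024-07-08"))  # Sat, Sun, Mon
wday(example_days, week_start = 7) %in% c(1, 7)  # TRUE TRUE FALSE
```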

```r
# Display panel structure summary
# c() coerces every Value entry to character, so counts are formatted for display
panel_summary <- data.frame(
  Metric = c("Number of Observations",
             "Number of Stations",
             "Number of Time Periods",
             "Average Trips per Station-Hour",
             "Median Trips per Station-Hour",
             "Total Trips in Panel"),
  Value = c(
    format(nrow(study_panel), big.mark = ","),
    format(n_distinct(study_panel$start_station), big.mark = ","),
    format(n_distinct(study_panel$interval60), big.mark = ","),
    round(mean(study_panel$trips), 2),
    median(study_panel$trips),
    format(sum(study_panel$trips), big.mark = ",")
  )
)
```
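
Two figures in this table can also serve as sanity checks. In a balanced panel, the number of observations equals stations × time periods. The total trips in the panel should equal the number of trip records, since every trip is counted into exactly one station-hour. A hypothetical helper, `check_panel()`, sketches those two checks plus a duplicate-key test. It is illustrative only and not part of the original analysis, and the toy panel below is made up.

```r
library(dplyr)

# Hypothetical helper: basic integrity checks for a station-hour panel
check_panel <- function(panel, trips) {
  n_stations <- n_distinct(panel$start_station)
  n_hours    <- n_distinct(panel$interval60)
  c(
    balanced        = nrow(panel) == n_stations * n_hours,
    unique_keys     = anyDuplicated(panel[, c("start_station", "interval60")]) == 0,
    trips_conserved = sum(panel$trips) == nrow(trips)
  )
}

# Hypothetical toy inputs: 3 trips spread over 2 stations and 2 hours
toy_trips <- data.frame(
  start_station = c(101, 101, 102),
  interval60 = as.POSIXct(c("2024-07-01 08:00:00",
                            "2024-07-01 08:00:00",
                            "2024-07-01 09:00:00"), tz = "UTC")
)
toy_panel <- data.frame(
  start_station = c(101, 102, 101, 102),
  interval60 = as.POSIXct(rep(c("2024-07-01 08:00:00",
                                "2024-07-01 09:00:00"), each = 2), tz = "UTC"),
  trips = c(2, 0, 0, 1)
)

check_panel(toy_panel, toy_trips)  # all three checks TRUE
```

On the real data, the equivalent call would be `check_panel(study_panel, indego)`.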

```r
kable(panel_summary,
      caption = "Panel Data Structure Summary",
      col.names = c("Metric", "Value")) %>%
  kable_styling(bootstrap_options = c("striped", "hover"),
                full_width = FALSE)
```